Cinematography in Virtual Production: Capturing Story Through the Lens

“I don’t really believe in the mystery of cinematography, what happens in the camera is what the cinematographers create and all that nonsense, I want the director to see what I’m trying to do.” — Roger Deakins, ASC

We’ve been lucky to work with some of the most prolific cinematographers in film, and we want to share some of what we’ve learned about applying their craft to an Unreal Engine pipeline. The cinematographers we admire include Greig Fraser, Barry Baz Idoine, Bill Pope, David Klein, Eric Steelberg, Quyen Tran, and Dean Cundey.

Cinematography is where art meets precision, the point where a story becomes an image. Whether captured on a digital sensor or rendered in Unreal Engine, everything we do in filmmaking traces back to how we control light, lens, and motion. It’s the language that transforms scripts into emotions on screen, and the camera department is the translator.

Greig Fraser framing a shot on The Mandalorian Season 1, against a large LED screen

The Camera Department: Where Vision Becomes Image

Cinematography is more than exposure and focus; it’s a collaboration. The camera department works in sync with lighting, grip, and art teams to shape what the audience feels, not just what they see.

Key Roles

Cinematographer (Director of Photography)

The DP defines the visual language of the film, interpreting story through light, lens, and movement. Working closely with the director, they guide tone and emotion.

They collaborate with the Gaffer and Key Grip to create lighting plots, which the Virtual Art Department (VAD) can translate through digital equivalents like color temperature controls and virtual fixtures.

Camera Operators

The hands behind the lens. They physically frame and capture the story, working from the DP’s direction. While virtual teams may not interact with them directly, they play a crucial role in in-camera VFX (ICVFX) shots, otherwise known as final-pixel environment shots.

Assistant Camera (1st and 2nd AC)

The 1st AC builds the camera and pulls focus. In a virtual or hybrid setup, they liaise with the Virtual Art Department to confirm lens data, tracker placement, and filmback details.

The 2nd AC slates and manages take logs, vital when syncing takes or capturing metadata in virtual workflows.

The slate helps match sound and video in editing by making a clear "clap" noise. It also shows details like scene number, take number, and production title to keep footage organized.

Supporting Roles that Shape the Image

Gaffer

The Gaffer leads the lighting department, executing the DP’s plan. They determine the light types, color temperatures, and modifiers, details that guide both physical and digital lighting setups.

Key Grip

Grips are the unsung engineers of motion and stability. Under the Key Grip, the team manages all camera and lighting support systems, from rigging mounts to platforms, enabling the DP’s vision safely and efficiently.

Script Supervisor

Though not part of the camera team, the Script Supervisor ensures continuity by tracking props, eyelines, and scene direction. Their notes are invaluable for virtual set turnarounds and scene synchronization.

However, no changes should be made based solely on their notes without alignment with the Assistant Director (AD), who confirms with key creatives before adjustments are made.

Cameras, Lenses, and Everything Between

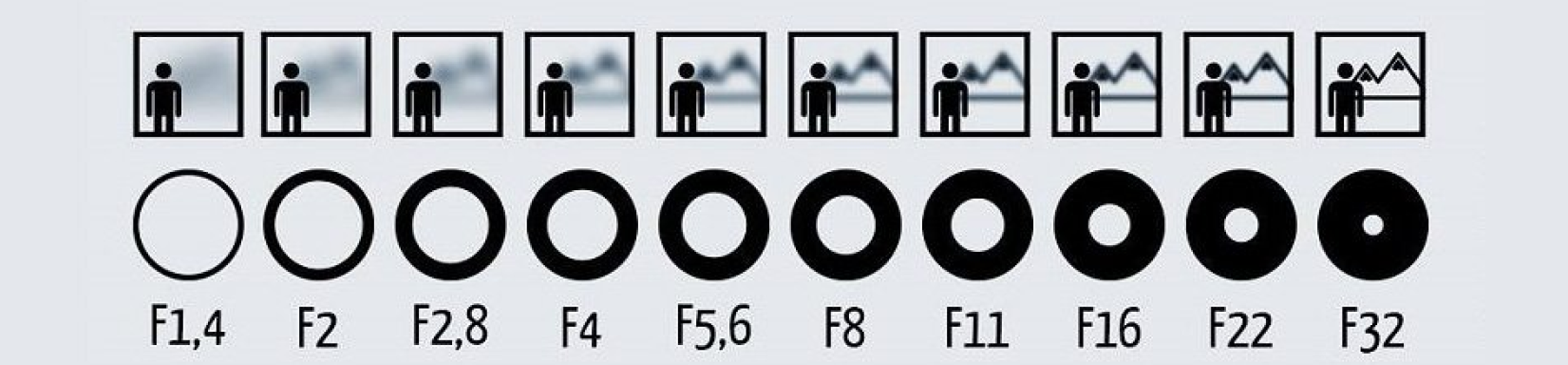

Aperture is the opening in a camera lens that controls the amount of light entering, measured in f-stop numbers. A wide aperture (low f-stop) makes whatever isn’t in focus blurrier, while a narrow aperture (high f-stop) keeps more of the scene sharp.
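To make the f-stop relationship concrete, here is a minimal Python sketch. It assumes the standard definition of an f-number as focal length divided by the diameter of the aperture opening; the function names are illustrative and are not part of any Unreal Engine API.

```python
import math


def aperture_diameter_mm(focal_length_mm: float, f_number: float) -> float:
    """Diameter of the aperture opening, from f-number = focal length / diameter."""
    return focal_length_mm / f_number


def stops_between(f_from: float, f_to: float) -> float:
    """Stops of light lost when going from f_from to f_to (negative means gained).

    Light scales with the area of the opening, so each stop is a factor
    of sqrt(2) in f-number.
    """
    return 2.0 * math.log2(f_to / f_from)


def relative_light(f_from: float, f_to: float) -> float:
    """Fraction of light reaching the sensor at f_to compared with f_from."""
    return (f_from / f_to) ** 2


if __name__ == "__main__":
    print(aperture_diameter_mm(50.0, 2.0))  # 25.0 (mm)
    print(stops_between(2.0, 4.0))          # 2.0 stops
    print(relative_light(2.0, 4.0))         # 0.25
```

In other words, a 50mm lens at f/2 has a 25mm opening, and stopping down from f/2 to f/4 costs two stops, or three quarters of the light.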

So cameras are complicated. The goal of this guide is to highlight the key variables we manipulate in Unreal Engine to get proper lensing for the key creatives on a project.

Behind every cinematic image lies a set of variables that define its look: sensor size, crop factor, aspect ratio, lens type, and dynamic range.

Various settings for the CineCamera Actor in Unreal Engine. Adjusting these can simulate a real camera.

Here is a somewhat standard CineCamera Actor in Unreal. Per project, we will receive a camera spec from the cinematographer, from which we can find the film settings. They will usually tell us a model and the aspect ratio, and whether or not it is anamorphic.

Specific settings for the CineCamera Actor in Unreal Engine, showing the setup for a certain Arri Alexa camera

Various sensors for different cameras, based on pixels

Various sensors for different cameras, based on the size of the physical sensor measured in millimeters

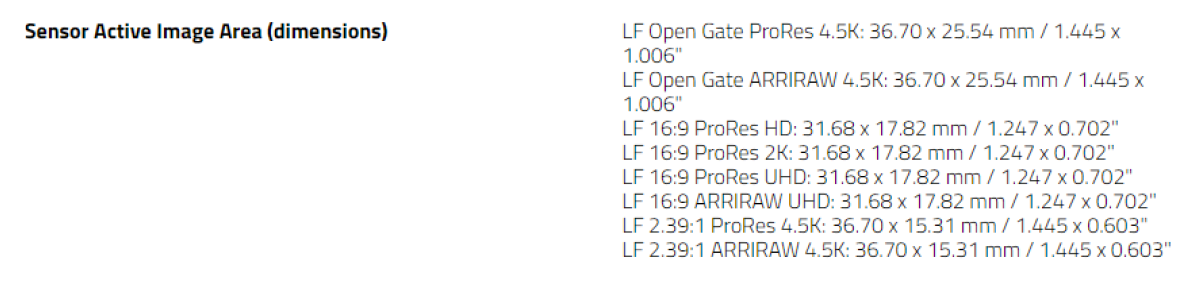

Here is a screengrab from Arri’s website showing the various sensor sizes. Each has two sets of dimensions, pixels and mm, of which we mostly care about the mm. The key thing to know is the aspect ratio, whether it is Open Gate, 16:9, or 2.39:1 in this example.
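As a rough sketch of how a delivery aspect ratio relates to a sensor’s mm dimensions, the Python below crops a sensor area to a target ratio. The function name and the 36 × 24 mm example values are assumptions for illustration only. Whether a given recording mode actually crops the sensor this way depends on the camera, so the vendor’s published dimensions for each mode should take precedence over a calculated value.

```python
def crop_to_aspect(sensor_w_mm: float, sensor_h_mm: float,
                   target_aspect: float) -> tuple[float, float]:
    """Largest area with the target aspect ratio that fits inside the sensor.

    Returns (width_mm, height_mm). A wider target keeps the full width and
    trims height; a narrower target keeps the full height and trims width.
    """
    sensor_aspect = sensor_w_mm / sensor_h_mm
    if target_aspect >= sensor_aspect:
        return sensor_w_mm, sensor_w_mm / target_aspect
    return sensor_h_mm * target_aspect, sensor_h_mm


if __name__ == "__main__":
    # Illustrative 36 x 24 mm sensor area (hypothetical, not a specific camera mode).
    for label, aspect in [("16:9", 16 / 9), ("2.39:1", 2.39)]:
        w, h = crop_to_aspect(36.0, 24.0, aspect)
        print(f"{label}: {w:.2f} x {h:.2f} mm")
```

The resulting width and height in mm are the kind of values that go into the CineCamera Actor’s filmback sensor width and height.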

Diagram showing the field of view for various lenses, ranging from 15mm (ultrawide angle) to 600mm (super telephoto)

Sensor Size & Crop Factor

Every camera’s sensor determines how much of the image the lens captures. The crop factor describes how much smaller (or larger) a sensor is compared to full-frame 35mm film. It affects both field of view and depth of field; a 50mm lens on a smaller sensor will appear more “zoomed in” than on full-frame.

Image showing the different sensor types: Full Frame (blue) and cropped (yellow & red)

This diagram visually explains the difference in field of view between a full-frame (35mm) sensor and a cropped sensor when using the same lens and focal length.
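The crop factor and field of view described above can be sketched in a few lines of Python. This assumes the common convention of comparing sensor diagonals against a 36 × 24 mm full frame, and a simple rectilinear lens focused at infinity; real lenses deviate somewhat (distortion, focus breathing). The function names and the 18 × 12 mm example sensor are hypothetical.

```python
import math

FULL_FRAME_MM = (36.0, 24.0)  # full-frame 35mm reference dimensions


def crop_factor(sensor_w_mm: float, sensor_h_mm: float) -> float:
    """Ratio of the full-frame diagonal to this sensor's diagonal."""
    return math.hypot(*FULL_FRAME_MM) / math.hypot(sensor_w_mm, sensor_h_mm)


def horizontal_fov_deg(sensor_w_mm: float, focal_length_mm: float) -> float:
    """Horizontal field of view of an ideal rectilinear lens at infinity focus."""
    return math.degrees(2.0 * math.atan(sensor_w_mm / (2.0 * focal_length_mm)))


def full_frame_equivalent_mm(focal_length_mm: float,
                             sensor_w_mm: float, sensor_h_mm: float) -> float:
    """Focal length that gives a similar framing on a full-frame sensor."""
    return focal_length_mm * crop_factor(sensor_w_mm, sensor_h_mm)


if __name__ == "__main__":
    # Hypothetical sensor exactly half the size of full frame in each dimension.
    w, h = 18.0, 12.0
    print(f"crop factor: {crop_factor(w, h):.2f}")
    print(f"50mm frames like {full_frame_equivalent_mm(50.0, w, h):.0f}mm on full frame")
    print(f"FOV on full frame: {horizontal_fov_deg(36.0, 50.0):.1f} deg")
    print(f"FOV on this sensor: {horizontal_fov_deg(w, 50.0):.1f} deg")
```

The same 50mm lens yields a narrower horizontal angle on the smaller sensor, which is the “zoomed in” effect the diagram illustrates.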

Spherical vs. Anamorphic Lenses

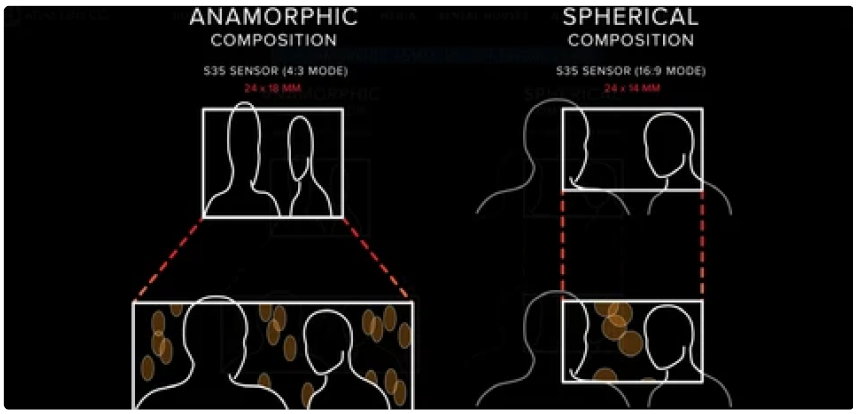

Anamorphic lenses were born from a challenge: how to make widescreen cinema feel immersive without changing the film stock. By horizontally compressing the image, they fit a wider view onto the same frame and produce distinctive bokeh.

Today, digital anamorphics recreate that same visual poetry: flares, oval bokeh, and edge distortion that lend texture and imperfection to pristine digital imagery.

An anamorphic lens and the resulting bokeh

Comparison of how anamorphic lenses are recorded vs. spherical lenses, and their resulting bokeh
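The horizontal squeeze can be expressed as simple arithmetic. The sketch below assumes the image is stretched back out (desqueezed) by the lens’s squeeze factor in the horizontal direction only; the function names and the example numbers are illustrative, not values from a specific camera or lens.

```python
def desqueezed_aspect(recorded_w: float, recorded_h: float, squeeze: float) -> float:
    """Aspect ratio of the image after horizontal desqueeze."""
    return (recorded_w * squeeze) / recorded_h


def desqueezed_resolution(width_px: int, height_px: int,
                          squeeze: float) -> tuple[int, int]:
    """Pixel dimensions after stretching the width by the squeeze factor."""
    return round(width_px * squeeze), height_px


if __name__ == "__main__":
    # A 4:3 recorded area with a 2x anamorphic lens (illustrative values).
    print(f"{desqueezed_aspect(4.0, 3.0, 2.0):.2f}:1")
    print(desqueezed_resolution(2880, 2160, 2.0))  # (5760, 2160)
```

A 4:3 area with a 2x squeeze desqueezes to roughly 2.67:1, which is typically cropped at the sides to reach a 2.39:1 frame.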

A still frame from the film ‘Lawrence of Arabia’, which was shot in 65mm Super Panavision 70 (a spherical-lens widescreen format)

Dynamic Range

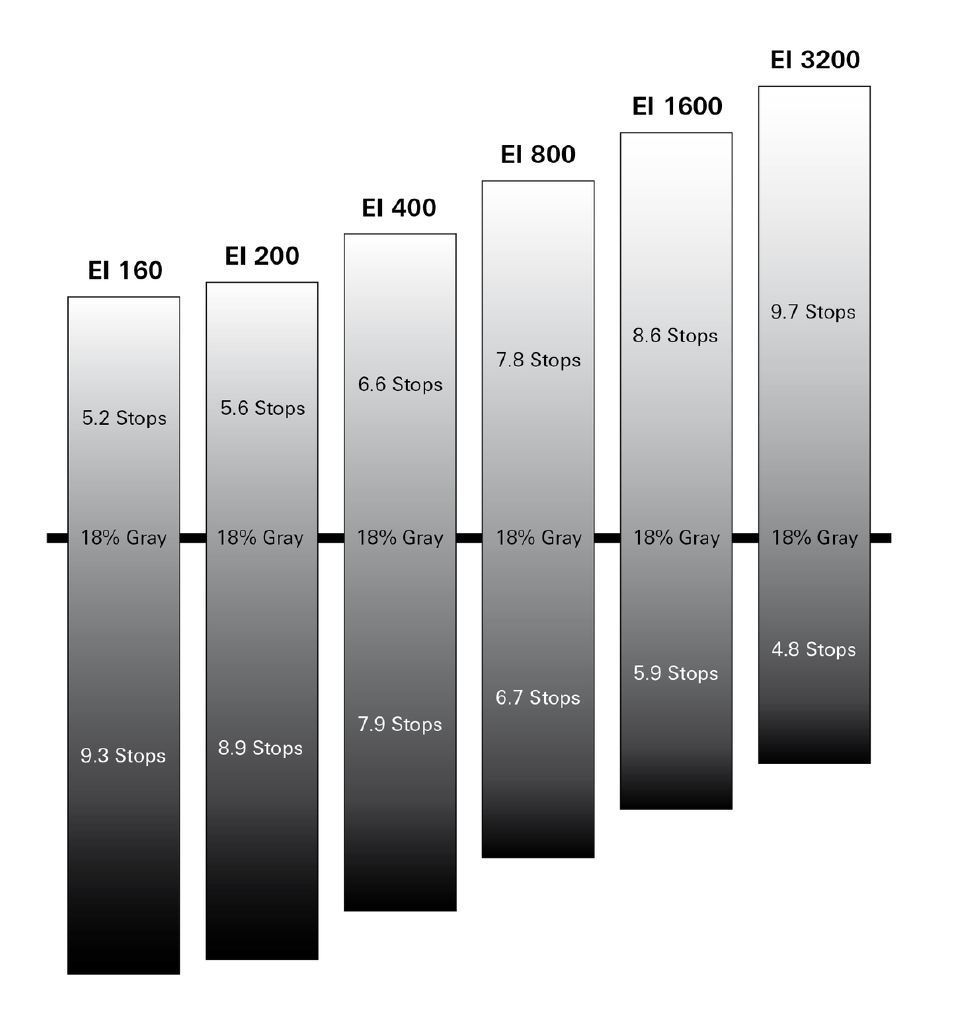

Dynamic range measures how much contrast a camera can capture, from the darkest shadows to the brightest highlights.

Cinema cameras often capture 11–16 stops of dynamic range, which allows the DP and colorist to shape tone and depth in post. In Unreal Engine, replicating this means balancing exposure, post-process settings, and light falloff to mimic natural light behavior.

Image from: https://vfxcamdb.com/filmdigital-back-101/
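Because each stop is a doubling of light, the stops of dynamic range mentioned above translate directly into a contrast ratio. Here is a minimal Python sketch of that conversion; the function names are illustrative.

```python
import math


def contrast_ratio(stops: float) -> float:
    """Brightest-to-darkest ratio for a given number of stops (each stop doubles)."""
    return 2.0 ** stops


def stops_from_ratio(ratio: float) -> float:
    """Number of stops spanned by a given brightest-to-darkest ratio."""
    return math.log2(ratio)


if __name__ == "__main__":
    for stops in (11, 14, 16):
        print(f"{stops} stops is about {contrast_ratio(stops):,.0f}:1")
```

This is why a few extra stops matter so much: going from 11 to 16 stops multiplies the capturable contrast ratio by 32.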

Bridging the Physical and Virtual Worlds

In Virtual Production, cinematography is still about storytelling, but now the tools are both real and digital. The Virtual Art Department (VAD) collaborates with the DP to simulate lensing, lighting, and color response inside Unreal Engine before a single frame is shot.

Every choice, from sensor specs to lens distortion, can be replicated virtually to match the physical camera package on set. The aim is that when light hits the LED volume or a CG render, it behaves as closely as possible to how it would in reality.

In the End, It’s Still About the Story

Technology evolves — from film to digital, from camera rigs to real-time render engines — but the cinematographer’s mission never changes: to make the audience feel something through the frame.

Cinematography is collaboration, experimentation, and craft. Whether you’re holding a camera or designing light inside Unreal Engine, the goal remains the same: capture frames in motion.